On December 6, 2014, researchers from the Max Planck Institute for Intelligent Systems in Tübingen, Germany, presented a study entitled MoSh: Motion and Shape Capture from Sparse Markers. Their research, published in the SIGGRAPH Asia 2014 edition of ACM Transactions on Graphics, details a new method of motion capture that allows animators to record the three-dimensional motion of human subjects and digitally “retarget” that movement onto other body shapes.

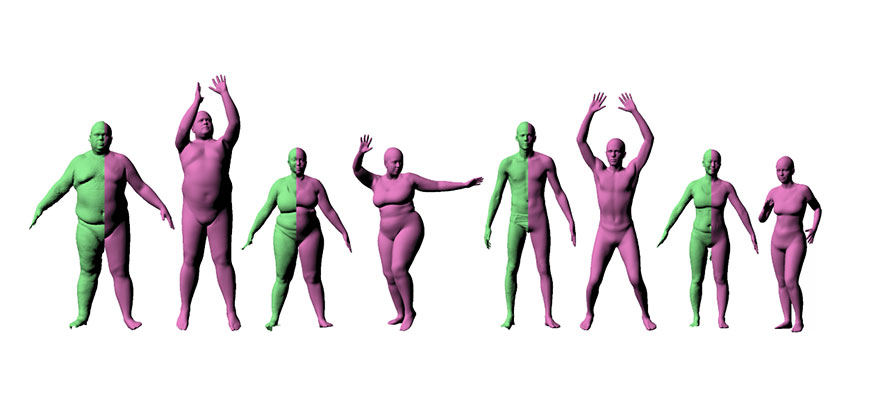

Unlike current motion capture (mocap) technology, MoSh doesn't require extensive hand animation to complete the mocap process. Instead, it uses a sophisticated mathematical model of human shape and pose to compute body shape and motion directly from 3D marker positions. This technique allows mocap data to be transferred to a new body shape, regardless of differences in size or build. To illustrate the capabilities of the system, its inventors captured the motion of a sleek female salsa dancer and swapped her body shape with that of a hulking ogre. The motion transferred smoothly between the two, resulting in an unexpectedly twinkle-toed block of a beast.

Devised by a team of researchers under the direction of Dr. Michael J. Black, MoSh is intended to simplify the creation of realistic virtual humans for games, movies, and other applications while also reducing animation costs. According to Naureen Mahmood, one of the co-authors of the study, MoSh throws the gates to motion capture wide open for projects of all sizes and budgets. “Realistically rigging and animating a 3D body requires expertise,” said Mahmood. “MoSh will let anyone use motion capture data to achieve results approaching professional animation quality.”

Because MoSh does not rely on a skeletal approach to animation, soft-tissue details of body shape, such as breathing, muscle flexing, and fat jiggling, are retained from the mocap marker data. Current methods discard such details and rely on manual animation techniques to recreate them after the fact.

“Everybody jiggles,” said Black. “We were surprised by how much information is present in so few markers. This means that existing motion capture data may contain a treasure trove of realistic performances that MoSh can bring to life.”

For more details on MoSh, download the full paper from the ACM Digital Library. Until November 2015, MoSh: Motion and Shape Capture from Sparse Markers is available to the public at no cost through open access (link below).

Open access to MoSh: Motion and Shape Capture via ACM SIGGRAPH

Direct link to MoSh: Motion and Shape Capture from Sparse Markers in the Digital Library (requires ACM SIGGRAPH membership, ACM membership, or a one-time download fee)

Open access to additional conference content from SIGGRAPH 2014 and SIGGRAPH Asia 2014