News

Learn About ACM SIGGRAPH Chapters at SIGGRAPH Asia

Are you excited about SIGGRAPH Asia and computer graphics? Don't want to wait until the next conference to get your CG fix? Then consider joining your local ACM SIGGRAPH chapter!

The goal of ACM SIGGRAPH chapters is to organize events in local communities and bring SIGGRAPH-style content to people in cities across the globe who share an interest in CG. Each chapter encompasses an entire city (or university, for student chapters) and is run by volunteers. Volunteering in your local chapter is a rewarding way to meet other computer graphics enthusiasts in your community, stay informed about the latest developments in the field, and keep the SIGGRAPH spirit alive from conference to conference.

No chapter in your city? Why not start your own? At SIGGRAPH Asia, the Professional and Student Chapters Committee (PSCC) will be available to help you get started. Just stop by the chapters booth and schedule a meeting. The PSCC will explain the chapter chartering process in detail, and provide ideas on how to build support within your community.

The PSCC will also be hosting special sessions at SIGGRAPH Asia. If you plan to be in Shenzhen this December, don't miss this opportunity to connect with the PSCC!

Professional and Student Chapters Committee Sessions at SIGGRAPH Asia 2014:

- Managing your SIGGRAPH Chapter (Thursday, 04 December, 11:00–12:45, Chrysanthemum Hall, Level 5)

- Getting to Know ACM SIGGRAPH Chapters (Friday, 05 December, 09:00–10:45, Chrysanthemum Hall, Level 5)

ACM SIGGRAPH Chapters will be holding meetings with people interested in volunteering to serve on the Professional and Student Chapters Committee. We hope to identify potential candidates who will work with us to build a support network for ACM SIGGRAPH Chapters in Asia. If you are interested in volunteering, please contact Mashhuda Glencross at mglencross@siggraph.org.

About SIGGRAPH Asia 2014:

Over four days this December, Asia’s largest computer graphics event, SIGGRAPH Asia 2014, will be hosted in Shenzhen, China. SIGGRAPH Asia 2014 is dedicated to presenting the most cutting-edge graphical achievements and product developments across a range of fields. More than 7,700 attendees from over 60 countries are expected, making it the largest and most respected computer graphics conference and exhibition in Asia. SIGGRAPH Asia 2014 will feature a wide array of experts and exhibits spanning hardware and software, film and game production, and research and education. For more information, or to register for the conference, visit the SIGGRAPH Asia 2014 website.

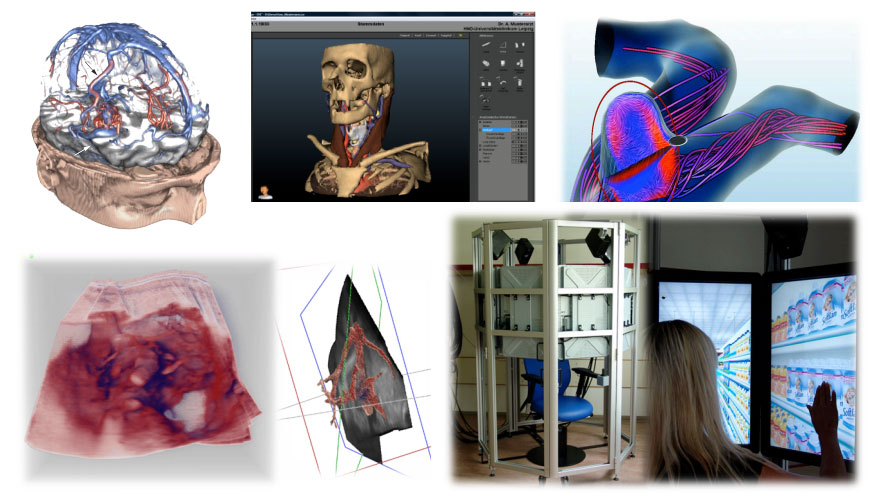

Eurographics Celebrates Computer Graphics in Medicine

Every two years, Eurographics organizes a competition called the Dirk Bartz Prize for Visual Computing in Medicine. The biennial award highlights the achievements of those who employ computer graphics for medical applications and encourages further research and development in the field.

The marriage of computer graphics and medicine has led to numerous, highly impactful advances in training, treatment and medical understanding. Those who work in the field of visual computing for medical applications may not be as well known to the general public as entertainment industry graphics professionals, but many have been instrumental in the creation of medical advances that have touched the lives of millions.

Past recipients of the Dirk Bartz Prize for Visual Computing in Medicine include:

- High-Quality 3D Visualization of In-Situ Ultrasonography (Ivan Viola, Åsmund Birkeland, Veronika Solteszova, Linn Helljesen, Helwig Hauser, Spiros Kotopoulis, Kim Nylund, Dag M. Ulvang, Ola K. Øye, Trygve Hausken, and Odd H. Gilja)

- Effective Visual Exploration of Hemodynamics in Cerebral Aneurysms (Mathias Neugebauer, Rocco Gasteiger, Gábor Janiga, Oliver Beuing, and Bernhard Preim)

- OCTAVIS: A Virtual Reality System for Clinical Studies and Rehabilitation (Eduard Zell, Eugen Dyck, Agnes Kohsik, Philip Grewe, David Flentge, York Winter, Martina Piefke, and Mario Botsch)

- VERT: A Virtual Environment for Radiotherapy Training and Education (James W. Ward, Roger Phillips, Annette Boejen, Cai Grau, Deepak Jois, and Andy W. Beavis)

- The Tumor Therapy Manager and its Clinical Impact (Ivo Rössling, Jana Dornheim, Lars Dornheim, Andreas Boehm, and Bernhard Preim)

- AVM-Explorer: Multi-Volume Visualization of Vascular Structures for Planning of Cerebral AVM Surgery (Florian Weiler, Christian Rieder, Carlos A. David, Christoph Wald, and Horst K. Hahn)

Researchers and developers who can demonstrate a specific benefit resulting from the use of their computer graphics technology in a medical application are invited to submit their work for the 2015 award. The top three entries for the Dirk Bartz Prize for Visual Computing in Medicine 2015 will each receive a prize, and their submissions will be published in the "Short Papers and Medical Prize Awards" digital media proceedings. Examples of eligible work include new data visualization techniques, interaction methods, and virtual or augmented environments.

Submissions for the 2015 Dirk Bartz Prize close on January 9, 2015. For more information on the Dirk Bartz Prize, or to submit your work for consideration, visit the Eurographics 2015 website.

Virtual Reality Conference Hits China

The 13th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry (VRCAI 2014) will take place from November 30 to December 2, in Shenzhen, China, just before SIGGRAPH Asia 2014.

The Virtual Reality Continuum (VRC) is concerned with the consistency between the virtual world and the real world. Spanning next-generation communication environments such as Virtual Reality (VR), Augmented Virtuality (AV), Augmented Reality (AR) and Mixed Reality (MR), VRC examines how we define our virtual worlds and how we interact with and within them. VRCAI 2014 is a forum where scientists, researchers, developers, users and industry leaders in the international VRC community can share experiences and exchange ideas about this rapidly growing field.

VRCAI 2014 will present papers and posters from academia and industry that explore technologies and applications in the VRC. The conference will focus on the main themes of VRC fundamentals, systems, interactions, industry and various applications of the VRC.

Early bird registration for VRCAI 2014 is available until October 31. For more information, visit the VRCAI 2014 website.

Córdoba to Host Conference on Human-Computer Interaction

The Third Argentine Conference on Human-Computer Interaction, Telecommunications, Informatics and Scientific Information (HCITISI 2014) is set to take place in Córdoba, Argentina, from November 13 to 14.

HCITISI is a forum for educators, researchers and practitioners involved with human-computer interaction, telecommunications, informatics and scientific information. Attendees will be exposed to a variety of papers, workshops, demos and posters, all geared toward disseminating the latest breakthroughs and current trends in each of the disciplines represented at the conference.

Speakers at HCITISI include Prof. Pedro Luna (former Chancellor, Santiago del Estero University), Prof. Rosanna Costaguta (National University of Santiago del Estero), Prof. Silvia Poncio (Universidad Abierta Interamericana), Prof. Fernando Dufour (National University of La Matanza) and Prof. Francisco V. C. Ficarra.

For more information or to register for the conference, visit the HCITISI 2014 website.