In his 2013 State of the Union address, President Obama made room in his speech to talk about a subject that some may have found an odd choice for a presidential address: 3D printing. His comments on 3D printing were positive, noting that the technology "has the potential to revolutionize the way we make almost everything." A little over a year later, President Obama's prediction was proven true — and in a rather personal way.

This past June, computer graphics experts from the Smithsonian and the USC Institute for Creative Technologies landed at Pennsylvania Avenue to create a 3D scan of President Obama. Not just any 3D scan, but the highest resolution digital model ever created of a head of state.

Günter Waibel, Director of the Smithsonian 3D Digitization Program Office, led the team that conducted the scan. In the White House's behind-the-scenes video of the project (below), Waibel is seen brandishing a life-sized plaster mask of Abraham Lincoln's face. "The inspiration for creating [Obama's] portrait," Waibel explained in the video, "comes from the Lincoln life mask in our National Portrait Gallery. Seeing the [Lincoln mask] made us think — what would happen if we could do that with a sitting president, using modern technologies and tools?"

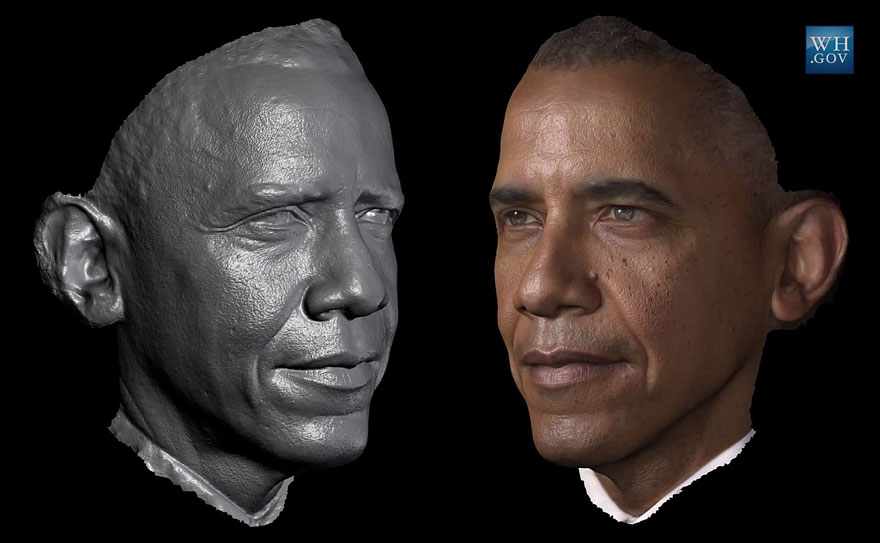

"This isn't an artistic likeness of the president," said Adam Metallo, 3D Digitization Program Officer at Smithsonian, as he sat before a computer displaying a seemingly perfect digital copy of President Obama's head. "This is actually millions upon millions of measurements that create a 3D likeness that we can now 3D print — and make something that's never been done before."

The models of President Obama were used to 3D print a life mask and a presidential bust that was unveiled at the first-ever White House Maker Faire in June. The data and printed models will be added to the Smithsonian’s National Portrait Gallery collection.

Tom Kalil, Deputy Director for Policy for the White House Office of Science and Technology Policy, shared his response to the scan in the White House making-of video. Kalil was enthusiastic about the project, especially the way it highlighted the value of 3D scanning and printing technologies. "The President getting his likeness scanned, as cool as that is, is also about a broader trend that's going on," he said. "The third industrial revolution … the combination of the digital world and the physical that is allowing students and entrepreneurs to be able to go from idea to prototype in the blink of an eye."

Paul Debevec, Associate Director of Graphics Research at USC ICT and former ACM SIGGRAPH Vice President, was part of the team that conducted the landmark presidential scan. "Ten years ago, it was barely possible to think this could be done," said Debevec. Since 2001, Debevec has led the Light Stage project at USC ICT, a series of increasingly advanced scanning and lighting rigs that allow users to collect a tremendous amount of geometry and illumination data from human subjects. The data gathered with the Light Stages is used to create believable digital representations of the subjects scanned.

"We used similar Light Stage technology to the polarized gradient illumination scanning process used for the Digital Emily project shown at SIGGRAPH 2008, and the Digital Ira project shown at SIGGRAPH 2013," said Debevec. "But we had to quickly create a mobile rig which could ship to Washington, squeeze through doorways, and scan very quickly. So we put 50 of our custom light sources and fourteen cameras from our lab’s Light Stage X system onto a rolling gantry, and framed the cameras so tightly so that if the subject was in frame, the subject was in focus."

Debevec was impressed with the results produced by the mobile Light Stage, but at the time, he may have been too busy enjoying the experience of spending an afternoon with the President of the United States to notice. "It was a great honor to be invited to the team by Günter Waibel," said Debevec, "and President Obama was not only a great subject — but he was genuinely interested in the technology."

More about the scanning and modeling process from Paul Debevec:

"Jay Busch and Xueming Yu from USC ICT wired and programmed the light sources. Graduate student Paul Graham programmed the scanning sequence, and Graham Fyffe designed a simplified set of lighting patterns which allowed us to perform a scan in just over a second. The Smithsonian’s Vince Rossi and Adam Metallo then used hand-held structured light scanners to record the rest of President Obama’s head and shoulders for the 3D printed bust. Back at the Smithsonian offices, Graham Fyffe solved for the 3D shape of the face using techniques from our Ghosh, et al. SIGGRAPH Asia 2011 paper “Multiview Face Capture using Polarized Spherical Gradient Illumination” at better than a tenth of a millimeter resolution, along with diffuse, specular, and surface normal maps from the polarized lighting.

"The Smithsonian team integrated the Light Stage model of the face with their model from the structured light scans and, with generous support from Autodesk and 3D Systems, printed the model life-size in 3D using Selective Laser Sintering to create the 3D Presidential Portrait.

"Being part of the SIGGRAPH community inspires us to do our very best work and to push our techniques further every day, and without that, I don’t think we could have played the role we did in this project."
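For readers curious how lighting patterns can yield the surface normal maps Debevec mentions, here is a minimal Python sketch of the basic idea behind spherical gradient illumination. It is an illustration only, not the team's pipeline or the multiview method from the cited paper: it assumes an idealized, perfectly diffuse surface lit from every direction, three gradient patterns that each ramp from 0 to 1 along one axis, and one constant full-on pattern. The function name and its parameters are hypothetical.

```python
import math


def normal_from_gradients(lx, ly, lz, lc):
    """Estimate a unit surface normal for one pixel (idealized diffuse case).

    lx, ly, lz: pixel intensities under the three gradient patterns,
                each ramping from 0 to 1 along the x, y, or z axis.
    lc:         pixel intensity under the constant (full-on) pattern.
    All names here are hypothetical, chosen for illustration.
    """
    # Each 0-to-1 ramp is half a signed gradient plus half the constant
    # pattern, so 2*l - lc recovers the signed response along that axis.
    v = (2.0 * lx - lc, 2.0 * ly - lc, 2.0 * lz - lc)
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("no usable signal at this pixel")
    # For an ideal diffuse surface the signed responses are proportional
    # to the normal, so normalizing gives its direction.
    return tuple(c / length for c in v)


def simulate_pixel(normal):
    """Ideal diffuse intensities for a known unit normal (test helper)."""
    lc = math.pi
    return tuple(math.pi / 3.0 * n + math.pi / 2.0 for n in normal) + (lc,)
```

Running `normal_from_gradients(*simulate_pixel((0.6, 0.0, 0.8)))` recovers the input direction up to floating-point error. Real skin is neither perfectly diffuse nor lit from a complete sphere, which is part of why the actual system relies on polarized lighting and multiple cameras.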