LET’S DRAW!: NEW DEEP LEARNING TECHNIQUE FOR REALISTIC CARICATURE ART

Data-driven method leverages deep learning AI for photo-to-caricature translation

TOKYO, Japan, 16 October 2018 – Caricature portrait drawing is a distinct art form in which artists sketch a person’s face in an exaggerated manner, most often to elicit humor. Automating this technique is challenging because of the intricate details and shapes involved and the level of professional skill it takes to transform a person’s real-life likeness into a creatively exaggerated one.

A team of computer scientists from City University of Hong Kong and Microsoft has developed an innovative deep learning-based approach that automatically generates a caricature of a given portrait, enabling users to do so efficiently and realistically.

“Compared to traditional graphics-based methods which define hand-crafted rules, our novel approach leverages big data and machine learning to synthesize caricatures from thousands of examples drawn by professional artists,” says Kaidi Cao, lead author, who is currently a graduate student in computer science at Stanford University but conducted the work during his internship at Microsoft. “While existing style transfer methods have focused mainly on appearance style, our technique achieves both geometric exaggeration and appearance stylization involved in caricature drawing.”

The method lets users automatically generate caricatures from portraits and can be applied to tasks such as creating caricatured avatars for social media and designing cartoon characters. The technique also has potential applications in marketing, advertising and journalism.

Cao collaborated on the research with Jing Liao of City University of Hong Kong and Lu Yuan of Microsoft, and the three plan to present their work at SIGGRAPH Asia 2018 in Tokyo from 4 December to 7 December. The annual conference features the most respected technical and creative members in the field of computer graphics and interactive techniques, and showcases leading-edge research in science, art, gaming and animation, among other sectors.

In this work, the researchers turned to a well-known machine learning technique, the generative adversarial network (GAN), for unpaired photo-to-caricature translation, generating caricatures that preserve the identity of the portrait. Called “CariGANs”, the computational framework precisely models geometric exaggeration in photos (shapes of faces, specific angles) and appearance stylization (look, feel, pencil strokes, shadowing) via two algorithms the researchers have named CariGeoGAN and CariStyGAN.

CariGeoGAN models only the geometry-to-geometry mapping from face photos to caricatures, while CariStyGAN transfers the appearance style from caricatures to face photos without deforming the geometry of the original image. The two networks are trained separately, one for each task, which makes the learning procedure more robust, the researchers note. The CariGANs framework lets users control the degree of exaggeration in both geometry and appearance style by dragging sliders or providing an example caricature.
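
To make the idea of a user-controlled exaggeration degree concrete, here is a minimal Python sketch. It is not the researchers’ implementation. It assumes, purely for illustration, that a geometry stage outputs a displacement for each facial landmark and that a slider value scales that displacement linearly. The names `exaggerate_landmarks`, `offsets` and `alpha` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def exaggerate_landmarks(
    landmarks: List[Point],
    offsets: List[Point],
    alpha: float,
) -> List[Point]:
    """Shift each landmark by alpha times its predicted offset.

    Assumption (not from the source): exaggeration is modeled as a
    linear scaling of per-landmark displacements. alpha = 0 keeps the
    original face shape; alpha = 1 applies the full predicted offset.
    """
    if len(landmarks) != len(offsets):
        raise ValueError("landmarks and offsets must have the same length")
    return [
        (x + alpha * dx, y + alpha * dy)
        for (x, y), (dx, dy) in zip(landmarks, offsets)
    ]


if __name__ == "__main__":
    # Two made-up landmark positions and made-up predicted offsets.
    face = [(0.0, 0.0), (10.0, 5.0)]
    predicted = [(2.0, -1.0), (0.0, 4.0)]
    for slider in (0.0, 0.5, 1.0):
        print(slider, exaggerate_landmarks(face, predicted, slider))
```

In this sketch the slider changes only the face shape. That mirrors the separation described above, in which geometry and appearance style are handled by different networks.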

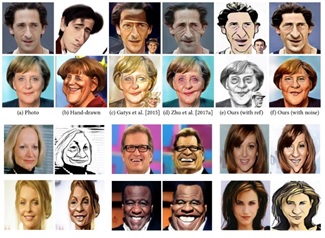

Cao and collaborators conducted perceptual studies to evaluate their framework’s ability to generate caricatures of portraits that are easily recognizable and not overly distorted in shape and appearance style. For example, one study assessed how well the identity of an image is preserved using the CariGANs method in comparison to existing methods for translating caricature art. They demonstrated, through several examples, that existing methods resulted in unrecognizable caricatures. Study participants found it too difficult to match the resulting caricatures with the original subjects because the end results were far too exaggerated or unclear. The researchers’ method successfully generated clearer, more accurate caricature depictions of portrait photos, as if they were hand-drawn by a professional artist.

So far, this work has focused on caricatures of people, primarily headshots or portraits. In future work, the researchers intend to move beyond facial caricature generation to full-body or more complex scenes. They are also interested in designing improved human-computer interaction (HCI) systems that would give users more freedom and control over the machine learning-generated results.

For the authors’ paper, titled “CariGANs: Unpaired Photo-to-Caricature Translation”, and supplementary material, visit the team’s project page.

To register for SIGGRAPH Asia, and for more details of the event, visit http://sa2018.siggraph.org. For more information on the Technical Papers Program, click here.